Using Different Types of Notebook

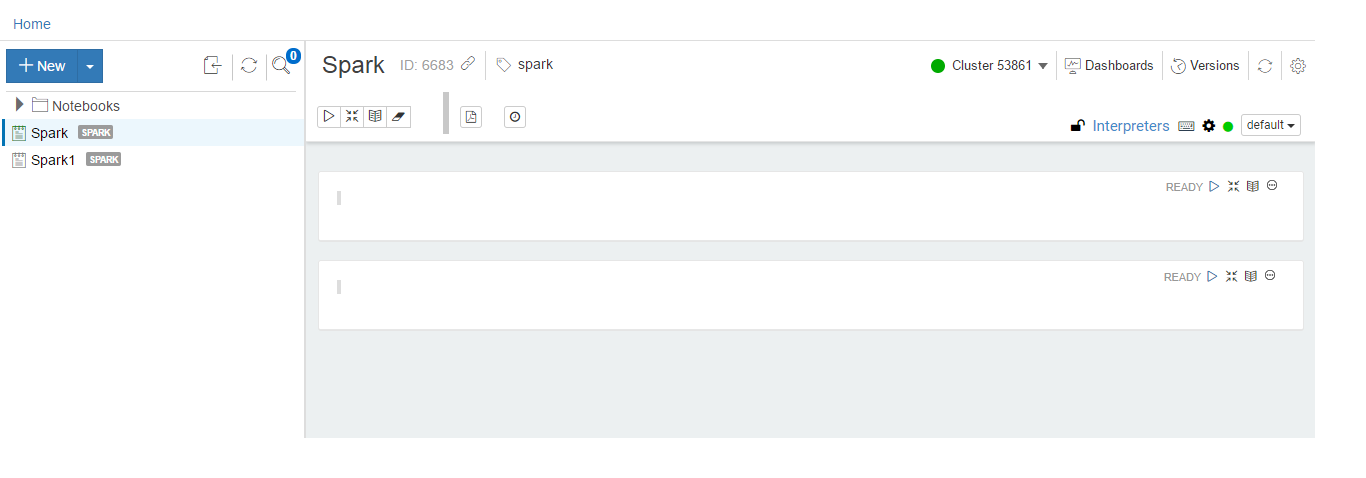

You can use a notebook only when its associated cluster is up. A sample notebook with its cluster up is as shown in the following figure.

Click the notebook in the left panel to view its details. The notebook’s ID, its type, and associated cluster are displayed.

Note

A pin is available at the bottom-left of the Notebooks UI. Use it to show or hide the left sidebar, which contains the list of notebooks.

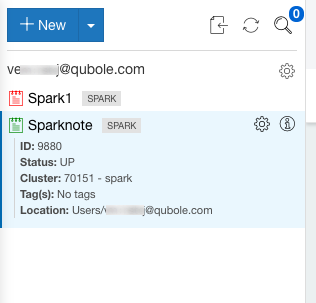

The following figure shows a notebook with its details displayed.

Using Folders in Notebooks explains how to use folders in the notebook.

You can run paragraphs in a notebook. After running a paragraph, you can export results that are in table format as CSV (comma-separated values), TSV (tab-separated values), or raw output. These options are available from the gear icon at the top-right corner of each paragraph. To download results:

- Click Download Results as CSV to get paragraph results in a CSV format.

- Click Download Results as TSV to get paragraph results in a TSV format.

- Click Download Raw Results to get paragraph results in a raw format.
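Once downloaded, CSV and TSV results can be processed with standard tooling. The following is a minimal sketch in Python, assuming the exported file starts with a header row of column names followed by data rows. The `read_results` helper, the sample column names, and the sample data are illustrative assumptions and are not taken from an actual Qubole export; verify the layout against a real download.

```python
import csv
import io


def read_results(text, fmt="csv"):
    """Parse exported paragraph results (CSV or TSV text) into a list of dicts.

    Assumes the first row holds the column names. This is an assumption
    about the export layout, not a documented guarantee.
    """
    delimiters = {"csv": ",", "tsv": "\t"}
    if fmt not in delimiters:
        raise ValueError(f"unsupported format: {fmt!r}")
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiters[fmt])
    return [dict(row) for row in reader]


# Hypothetical sample data standing in for the contents of downloaded files.
sample_csv = "name,count\nalpha,3\nbeta,5\n"
sample_tsv = "name\tcount\nalpha\t3\nbeta\t5\n"

csv_rows = read_results(sample_csv, fmt="csv")
tsv_rows = read_results(sample_tsv, fmt="tsv")

# Both formats carry the same rows; every value is read as a string.
print(csv_rows == tsv_rows)
print(csv_rows[0])
```

For a real download, open the file with `open(path, newline="")` and pass its contents to the helper. Values are returned as strings, so convert numeric columns explicitly before computing on them.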

Qubole provides code autocompletion in paragraphs and the ability to stream outputs and query results. Notebooks also provide improved dependency management.

Currently, only Spark notebooks are supported. See Using a Spark Notebook.

Using a Spark Notebook

Select a Spark notebook from the list of notebooks and ensure that its assigned cluster is up before you use it to run queries. See Running Spark Applications in Notebooks and Understanding Spark Notebooks and Interpreters for more information.

See Using the Angular Interpreter for more information.

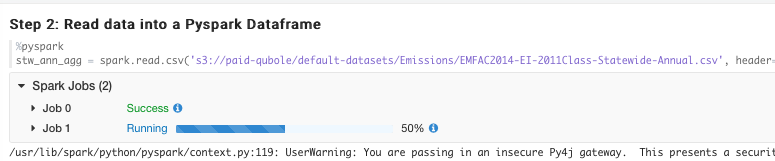

When you run paragraphs in a notebook, you can watch the progress of the job or jobs generated by each paragraph. The following figure shows a sample paragraph with progress of the jobs.

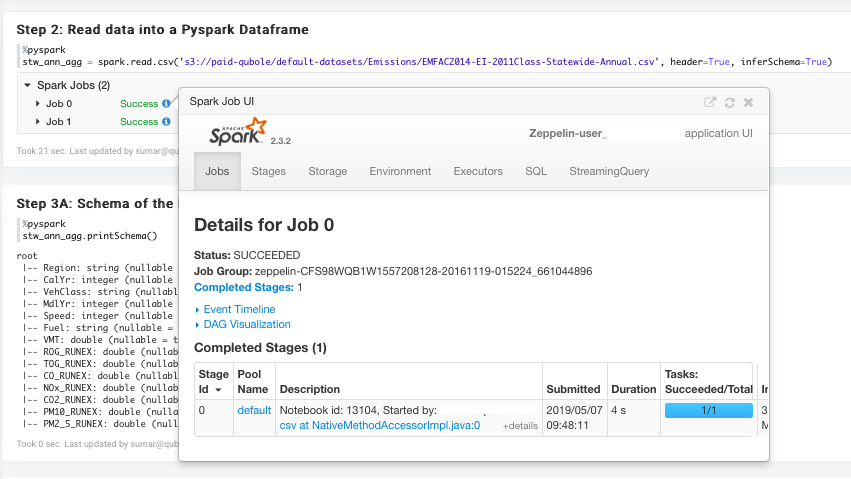

For more details about the job, click on the info icon adjacent to the job status in the paragraph, the Spark Application UI is displayed as shown below.

You can see the Spark Application UI in a specific notebook paragraph even when the associated cluster is down.